We try to design our software to eliminate entire classes of bugs. State updates happen in transactions so they’re all or nothing. We favor immutable state and INSERTs over UPDATEs. We like simple, functional (or idempotent) code that is easy to debug and refactor.

Caching, by contrast, exposes you to new classes of bugs. That’s why we’re cache-weary: we’ll cache if we have to, but frankly, we’d rather not.

Downsides of caching:

- Introduces bugs

- Stale data

- Performance degradation goes unnoticed

- Increased cognitive load

- Other tail risks

Caching introduces an extra layer of complexity and that means potential for bugs. If you design your cache layers carefully (more about that later) this risk is reduced, but it’s still substantial. If you use memcached you can have bugs where the service is misconfigured and listens to a pubic IP address. Or maybe it binds on both IPv4 and IPv6 IPs, but only your IPv4 traffic is protected by a firewall. Maybe the max key size of 250 bytes causes errors, or worse, fails silently and catastrophically when one user gets another user’s data from the cache when key truncation results in a collision. Race conditions are another concern. Your database, provided you use transactions everywhere, normally protects you against them. If you try to write stale data back into the database your COMMIT will fail and roll back. If you mess up and write data from your cache to your database you have no such protection. You’ll write stale data and suffer the consequences. The bug won’t show up in low load situations and you’re unlikely to notice anything is wrong until it’s too late.

There are also some more benign problems caused by cache layers. The most common one is serving stale data to users. Think of a comment section on a blog where new comments don’t show up for 30 seconds, or maybe all 8 comments show up but the text above still says “Read 7 comments”. In another app you delete a file but when you go back you still see the file in your “Recents” and “Favorites”. I guess it’s easier to make software faster when you don’t care about correctness, but the user experience suffers.

Many other types of data duplication are also caching by another name. If you serve content from a CDN you have to deal with the same issues I touched on above. Is the data in sync? What if the CDN goes down? If content changes can you invalidate the CDN hosted replica instantly?

Maybe you decide to reduce load from your primary database server by directing simple select queries to read-only mirrors. It’s also a form of caching, and unsurprisingly you have to deal with the same set of problems. A database mirror will always lag behind the primary server. That can result in stale data. What if you try to authenticate against the mirror right after changing your password?

Caching layers can also obscure performance issues. An innocent patch can make a cheap function really slow to run, but when you cache the output you won’t notice. Until you restart your service and all traffic goes to a cold cache. Unable to deal with the uncached load the system melts down.

Things that used to be simple are complicated now. That’s really what it all boils down to. Caching layers complicate the architecture of your system. They introduce new vectors for serious security vulnerabilities, data loss, and more. If you don’t design your caching abstractions carefully you have to think about the caching implications every time you make simple changes or add simple features. That’s a big price to pay when the only benefit of caching is performance.

Alternatives to caching

- Buy faster hardware

- Write smarter algorithms

- Simplification

- Approximation

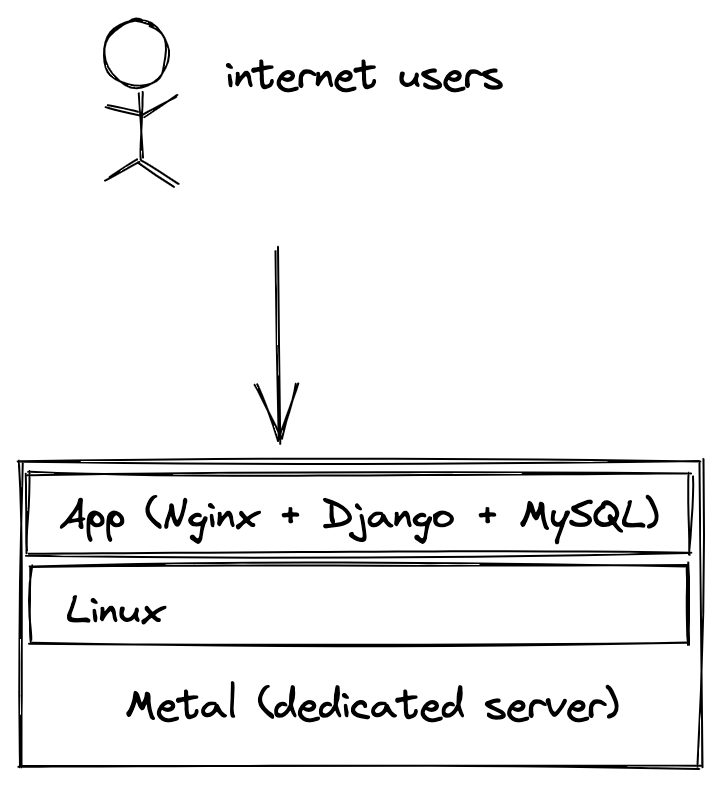

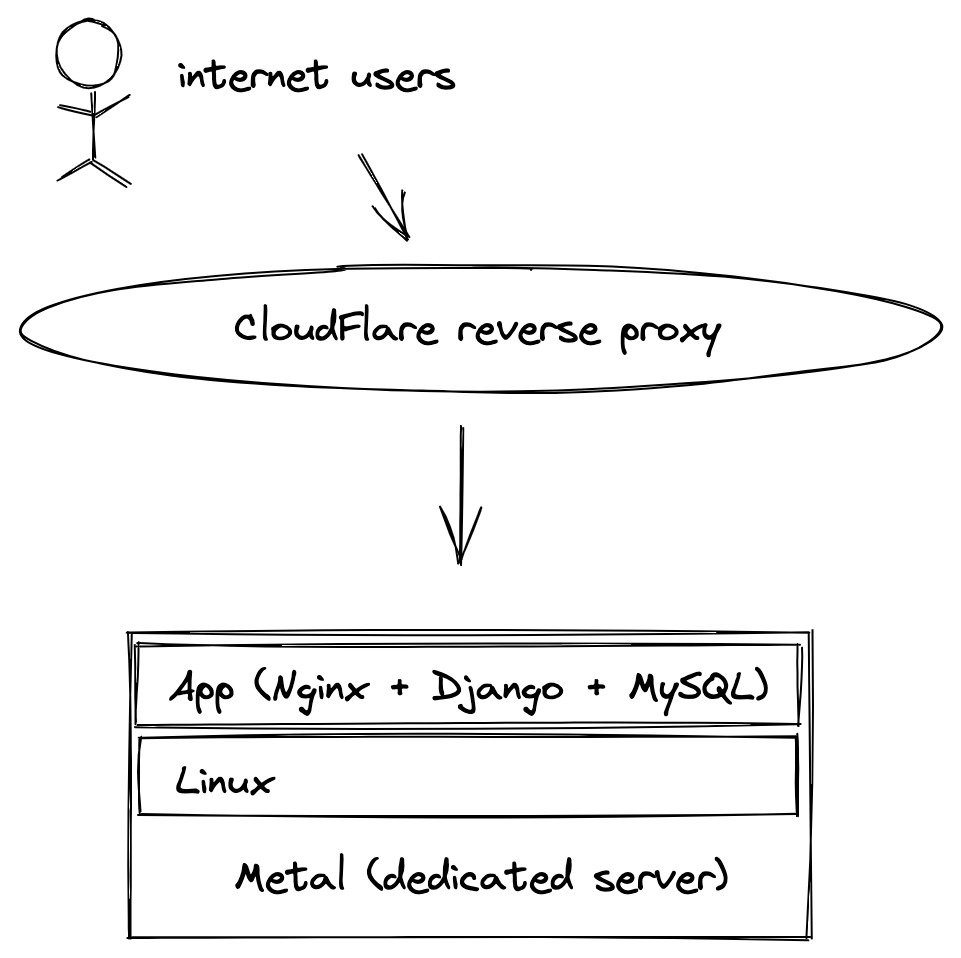

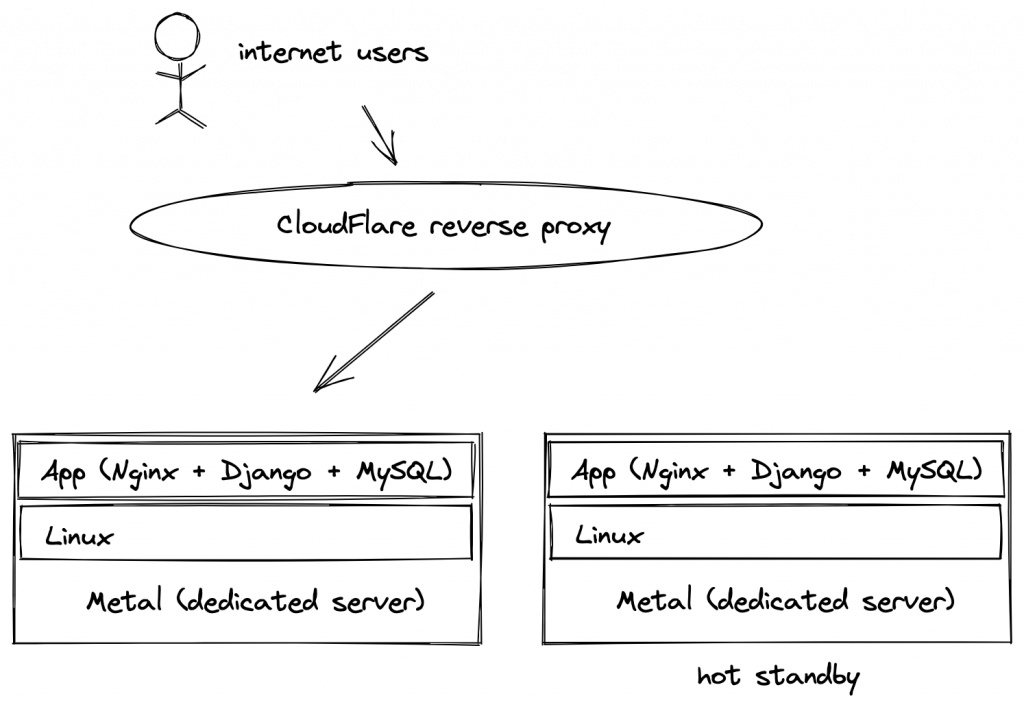

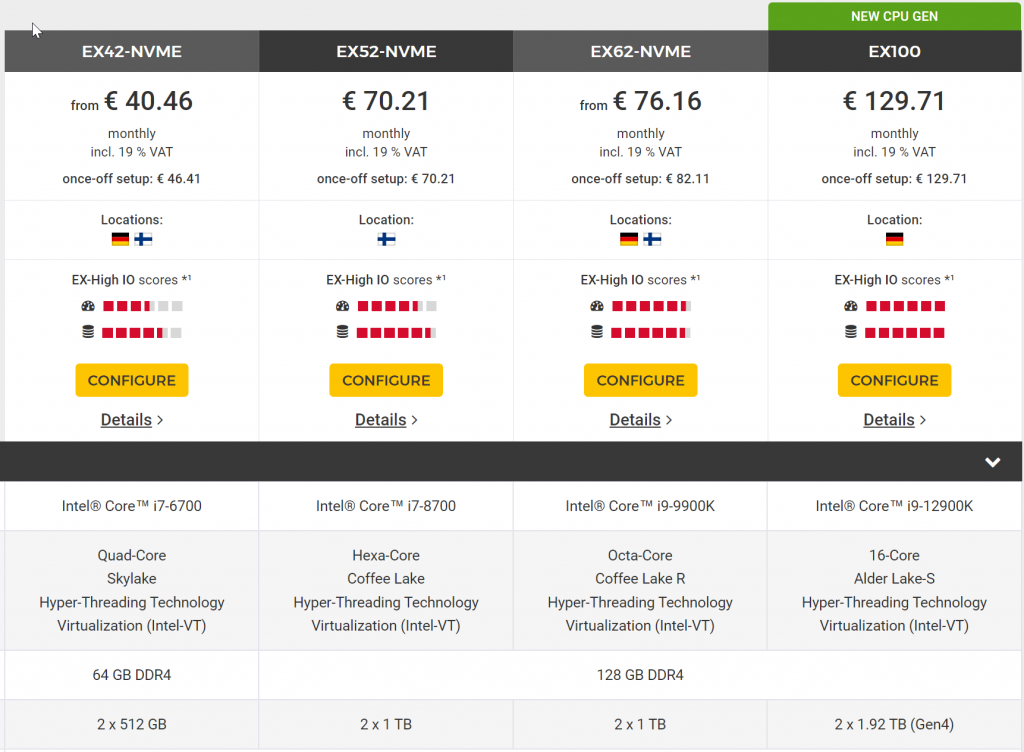

Buying faster hardware is an underrated alternative. Really fast servers are not expensive anymore, and dedicated servers are much faster than anything you can get virtualized on the cloud. Dedicated servers have their own downsides, I won’t deny that. Still, performance is just much less of an issue when you have a monolithic architecture that runs on a server with 32 cores and 500gb of ram and more NVME storage than you will ever need.

Algorithmic simplifications are often low-hanging fruit. Things get slow when data is processed and copied multiple times. What does a typical request look like? Data is queried from a database, json is decoded, ORM objects are constructed, some processing happens, more data is queried, more ORM objects are constructed, new data is constructed, json is encoded and sent back over a socket. It’s easy to lose sight of how many CPU cycles get burned for incidental reasons, that have nothing to do with the actual processing you care about. Simple and straightforward code will get you far. Stack enough abstractions on top of each other and even the fastest server will crawl.

Approximation is another undervalued tool. If it’s expensive, for one reason or another, to tell the user exactly how many search results there are you can just say “hundreds of results”. If a database query is slow look for ways to split the query up into a few simple and fast queries. Or overselect and clean up the data in your server side language afterwards. If a query is slow and indices are not to blame you’re either churning through a ton of data or you make the database do something it’s bad at.

Our approach

The kind of caching we think is mostly harmless is caching that works so flawlessly you never even have to think about it. When you have an app that’s read heavy maybe you can just bust the entire cache any time a database row is inserted or updated. You can hook that into your database layer so you can’t forget it. Don’t allow cache reads and database writes to mix. If busting the entire cache on a write turns out to be too aggressive you can fine-tune in those few places where it really matters. Think of busting the entire cache on write as a whitelisting approach. You will evict good data unnecessarily, but in exchange you eliminate a class of bugs. In addition we think short duration caches are best. We still get most of the benefit and this way we won’t have to worry that we become overly reliant on our cache infrastructure.

We also make some exceptions to our cache-weary attitude. If you offer an API you pretty much have to cache aggressively. Simply because your API users are going to repeatedly request the same data again and again. Even when you provide bulk API calls and options to select related data in a single request you’ll still have to deal with API clients that take a “Getta byte, getta byte, getta byte” approach and who will fetch 1 row at a time.

As your traffic grows you’ll eventually have to relent and add some cache layers to your stack. It’s inevitable but the point where caching becomes necessary is further down the road than you’d think. Until the time comes, postpone caching. Kick that can down the road.