Which stack to pick is a recurring topic, and we firmly believe dedicated hardware [1] is (still!) a great option, especially for bootstrapped (SaaS) startups.

A few people pointed out we use a few cloud services as well, so which is it, cloud or metal? And what exactly does our favorite stack look like then?

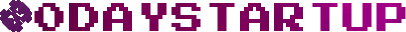

I like to call our stack CALM. The purpose of CALM is in the name: we want the most bang for buck while keeping things simple and boring. We’re a tiny team and want our SaaS to scale to many customers (potentially millions of users) without having to worry about complexity and cost. Let’s look at the different parts:

1U ought to be enough for anybody!

If you’re puzzled by the “one machine” part: yes, that’s how ridiculously cheap and powerful metal is these days.

For ~$150/month you can get an absolute beast of a machine, with 128 GB of RAM, 2 TB SSDs and dozens of 5 GHz cores. You don’t pay for each byte of traffic, each CPU cycle or a few extra GB of RAM. It makes tiny cloud compute nodes look like toys. It’s powerful enough that you can run everything on one machine for a long while, so your architecture becomes super simple at this point. When things start to grow further, we’ll have plenty of RAM to cache queries and dynamic parts of the app.
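To make the “plenty of RAM to cache queries” part a bit more concrete, here is a minimal sketch of an in-process cache with a time-to-live. It is not our actual code: `TTLCache` and `get_or_compute` are made-up names, and a real app might just as well use whatever caching its framework ships with.

```python
import time
from typing import Any, Callable, Dict, Hashable, Tuple


class TTLCache:
    """Tiny in-memory cache: keeps a value until its time-to-live expires."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so the cache can be tested without sleeping
        self._entries: Dict[Hashable, Tuple[float, Any]] = {}

    def get_or_compute(self, key: Hashable, compute: Callable[[], Any]) -> Any:
        """Return the cached value for key, or call compute() and store the result."""
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # still fresh: no query needed
        value = compute()  # e.g. run the expensive database query
        self._entries[key] = (now + self._ttl, value)
        return value
```

With a single machine the cache lives in the same process as the app, so there is no extra service to run. The trade-off is that a restart empties it, which is usually fine for query results.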

Sprinkle on the cloud!

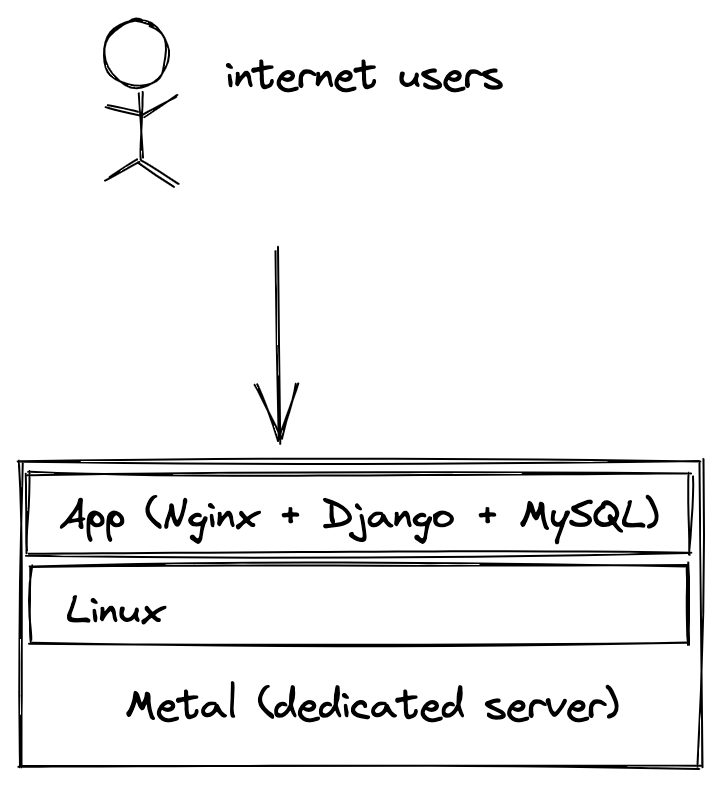

This is already enough to handle many thousands of users, no problem. But to make it even more robust, we like to sprinkle a bit of cloud on top:

By using Cloudflare as a reverse proxy, we significantly reduce the number of requests that hit our server. It’s literally set and forget. We set up the Cloudflare proxy in their dashboard and firewall everything else off. It doesn’t add any real complexity to our stack. And it’s cheap (even free for what we need). We can cache and serve static assets from Cloudflare from a location close to the user. There’s really a lot of traffic you can serve this way.
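To give an idea of what “firewall everything else off” boils down to: the origin only accepts traffic that comes from the proxy’s address ranges. The real rule would live in the firewall rather than in the app, so the Python below is only a sketch of the check itself. The ranges are an illustrative subset of the IPv4 ranges Cloudflare publishes, and `is_from_proxy` is a made-up helper name; a real setup would load the current published list instead of hard-coding it.

```python
import ipaddress

# Illustrative subset only. Assumption: the full, current list is loaded
# from Cloudflare's published IP ranges in a real deployment.
PROXY_RANGES = [
    "173.245.48.0/20",
    "103.21.244.0/22",
    "104.16.0.0/13",
]

PROXY_NETWORKS = [ipaddress.ip_network(cidr) for cidr in PROXY_RANGES]


def is_from_proxy(remote_addr: str) -> bool:
    """Return True if remote_addr falls inside one of the allowed proxy ranges."""
    addr = ipaddress.ip_address(remote_addr)
    # An IPv6 address is never "in" an IPv4 network, so it is simply rejected here.
    return any(addr in network for network in PROXY_NETWORKS)
```

Anything that fails this check never needs to reach the app at all, which is what keeps the origin quiet even when the public hostname gets a lot of traffic.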

Reliability through simplicity

Servers these days are super reliable. I like to compare it to ETOPS for planes, the rules that let twin-engine airliners fly long routes far from the nearest airport: with modern hardware, you no longer need 4 clunky engines.

By design, the entire stack has very few moving parts. It’s easier to set up, simpler to maintain, and cheap. The more parts, the more can break. No 3am magic automatic migrations, noisy neighbors, billing alerts, or having to refactor your app because some inefficient query is eating up all your profits in cloud costs, none of it.

Another advantage is that a small dev VM on our laptop can mirror the entire architecture, so even if we want to make changes to the server setup (which we hardly ever do), we can test the exact thing on our laptop.

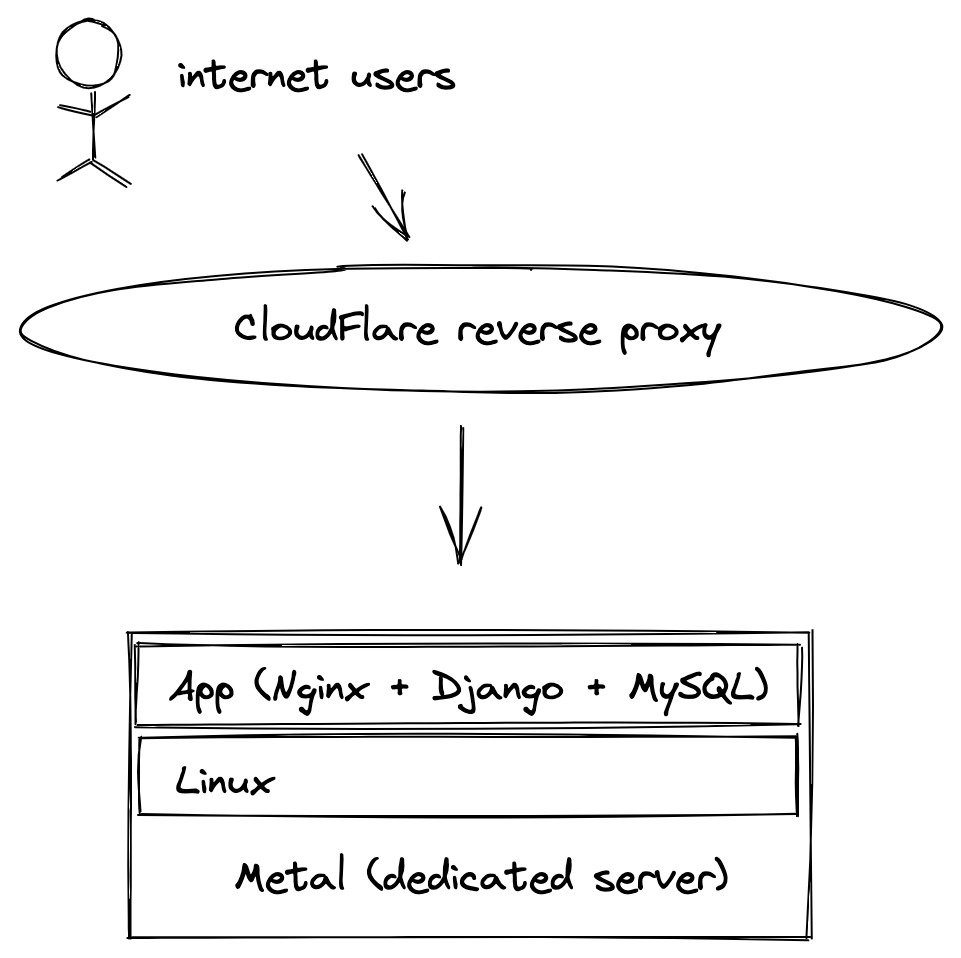

We’ve never had significant outages, but of course catastrophe can always strike (and the cloud is not immune to that either!). You can find examples of an OVH datacenter literally catching fire. It’s very rare, but we want to be prepared!

When starting out we simply stored a backup of the entire server at a different location (again, cloud sprinkled on top). That’s OK, but in a worst-case disaster we would have to spend half a day restoring things, which isn’t very calm. Because servers are so cheap, we’ve now simply added a completely identical mirror as a hot standby in a datacenter in a different part of the world.

If something goes terribly wrong, we simply point the DNS to the other server. And in an even worse case we could completely switch hosting providers and move our entire architecture somewhere else (not that we’ve ever had to deal with problems like these).
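The “point the DNS to the other server” decision can be sketched in a few lines. This is an illustration under assumptions, not a description of our tooling: `http_ok` and `pick_target` are hypothetical names, and the actual DNS update is left out because it depends entirely on the DNS provider.

```python
import http.client
import urllib.request
from typing import Callable


def http_ok(url: str, timeout: float = 3.0) -> bool:
    """Return True if the URL answers with a 2xx status within the timeout."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 300
    except (OSError, http.client.HTTPException):
        # Covers refused connections, timeouts, DNS failures and HTTP error statuses.
        return False


def pick_target(primary: str, standby: str,
                is_healthy: Callable[[str], bool] = http_ok,
                attempts: int = 3) -> str:
    """Stay on the primary unless every one of `attempts` health checks fails."""
    if any(is_healthy(primary) for _ in range(attempts)):
        return primary
    return standby
```

Requiring several failed checks in a row avoids flipping to the standby because of a single dropped request. Whether the switch itself is automated or done by hand is a separate choice; a manual switch keeps the number of moving parts down.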

CALM

So there we have it, our CALM stack: Cloudflare (or other CDN/proxy) -> App -> Linux -> Metal.

Of course, some percentage of startups are going to have special requirements (petabytes of storage, 99.99999% uptime) and this won’t work for everyone. But when your database is only a hundred gigabytes or so and the whole thing fits into RAM, you don’t need to worry about “big data” problems. Similarly, lots of websites and apps can get away with a minute of downtime per month (if that happens at all).
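To put those uptime figures side by side, the arithmetic is short enough to write down. Assuming a 30-day month, 99.99999% uptime leaves roughly a quarter of a second of downtime, while a full minute of downtime still works out to about 99.998% uptime. The function names below are made up for this sketch.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def downtime_seconds_per_month(uptime_percent: float, days: int = 30) -> float:
    """Downtime budget in seconds for a given uptime percentage."""
    return days * SECONDS_PER_DAY * (1 - uptime_percent / 100)


def uptime_percent_for(downtime_seconds: float, days: int = 30) -> float:
    """Uptime percentage implied by a given amount of downtime in seconds."""
    return 100 * (1 - downtime_seconds / (days * SECONDS_PER_DAY))
```

The gap between those two numbers is where most of the cost and complexity of high-availability setups goes, and plenty of products simply don’t need it.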

It will be a long time before we outgrow this architecture, which only costs about $500/month, and that means we can worry about the product and customers instead.

[1] Earlier this week, we wrote about how You Don’t Need The Cloud.